Utility Meter Data Analytics on the AWS Cloud

Quick Start Reference Deployment

October 2020

Avneet Singh, Juan Yu, Sascha Rodekamp, Tony Bulding, and Shivansh Singh, AWS

| Visit our GitHub repository for source files and to post feedback, report bugs, or submit feature ideas for this Quick Start. |

This Quick Start was created by Amazon Web Services (AWS). Quick Starts are automated reference deployments that use AWS CloudFormation templates to deploy key technologies on AWS, following AWS best practices.

Overview

This guide provides instructions for deploying the Utility Meter Data Analytics Quick Start reference architecture on the AWS Cloud.

This Quick Start deploys a platform that uses machine learning (ML) to help you analyze data from smart utility meters. It’s for utility companies or other organizations that are looking to gain insights from smart-meter data. This data comes from meter data management (MDM) or similar systems. Insights include unusual energy usage, energy-usage forecasts, and meter-outage details.

Except for Amazon Redshift, this is a serverless architecture.

Utility Meter Data Analytics on AWS

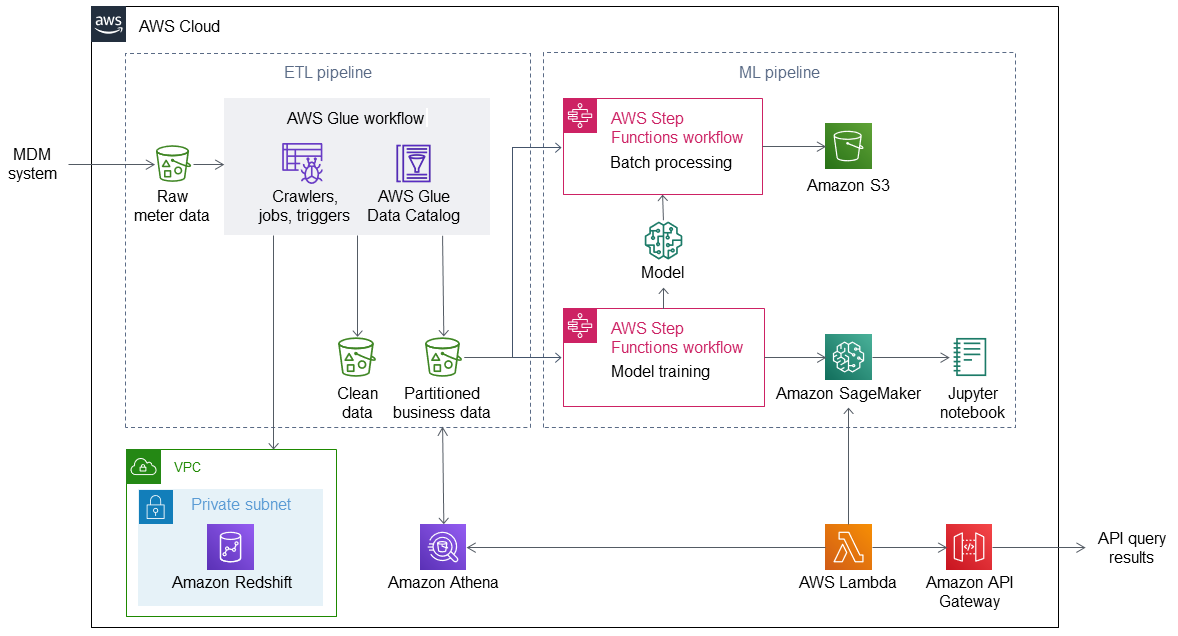

The Utility Meter Data Analytics Quick Start deploys a serverless architecture to ingest, store, and analyze utility-meter data. It creates an AWS Glue workflow, which consists of AWS Glue triggers, crawlers, and jobs as well as the AWS Glue Data Catalog. This workflow converts raw meter data into clean data and partitioned business data.

Next, this partitioned data enters an ML pipeline, which uses AWS Step Functions and Amazon SageMaker. First, an AWS Step Functions model-training workflow creates an ML model. Then another AWS Step Functions workflow uses the ML model to batch process the meter data. The processed data becomes the basis for forecasting energy usage, detecting usage anomalies, and reporting on meter outages. The Quick Start provides a Jupyter notebook that you can use to do data science and data visualizations on a provided sample dataset or run queries on your own meter data.

This Quick Start also creates application programming interface (API) endpoints using Amazon API Gateway. API Gateway provides REST APIs that deliver the query results.

AWS costs

You are responsible for the cost of the AWS services and any third-party licenses used while running this Quick Start. There is no additional cost for using the Quick Start.

The AWS CloudFormation templates for Quick Starts include configuration parameters that you can customize. Some of the settings, such as the instance type, affect the cost of deployment. For cost estimates, see the pricing pages for each AWS service you use. Prices are subject to change.

| After you deploy the Quick Start, create AWS Cost and Usage Reports to deliver billing metrics to an Amazon Simple Storage Service (Amazon S3) bucket in your account. These reports provide cost estimates based on usage throughout each month and aggregate the data at the end of the month. For more information, see What are AWS Cost and Usage Reports? |

Software licenses

This Quick Start doesn’t require any software license or AWS Marketplace subscription.

Architecture

Deploying this Quick Start for a new virtual private cloud (VPC) with default parameters builds the following Utility Meter Data Analytics environment in the AWS Cloud.

As shown in Figure 1, the Quick Start sets up the following:

- A VPC configured with a private subnet, according to AWS best practices, to provide you with your own virtual network on AWS.*
- In the private subnet, an Amazon Redshift cluster that stores business data for analysis, visualization, and dashboards.
- An extract, transform, load (ETL) pipeline:
  - S3 buckets to store data from an MDM or similar system. Raw meter data, clean data, and partitioned business data are stored in separate S3 buckets.
  - An AWS Glue workflow:
    - Crawlers, jobs, and triggers to crawl, transform, and convert incoming raw meter data into clean data in the desired format and partitioned business data.
    - Data Catalog to store metadata and source information about the meter data.
- An ML pipeline:
  - Two AWS Step Functions workflows:
    - Batch processing, which uses the partitioned business data and the data from the model as a basis for forecasting.
    - Model training, which uses the partitioned business data to build an ML model.
  - Amazon S3 for storing the processed data.
  - Amazon SageMaker for real-time forecasting of energy usage.
  - A Jupyter notebook with sample code to perform data science and data visualization.
- AWS Lambda to query the partitioned business data through Amazon Athena or invoke SageMaker to provide API query results.
- Amazon API Gateway to deliver API query results for energy usage, anomalies, and meter outages.

*The template that deploys the Quick Start into an existing VPC skips the components marked by asterisks and prompts you for your existing VPC configuration.

Planning the deployment

Specialized knowledge

This deployment requires a moderate level of familiarity with AWS services. If you’re new to AWS, see Getting Started Resource Center and AWS Training and Certification. These sites provide materials for learning how to design, deploy, and operate your infrastructure and applications on the AWS Cloud.

Assumptions

This Quick Start assumes the following:

- Meter data has already been validated and processed by the MDM system.
- Weather data, while not required, is recommended because it produces insights when correlated with unusual energy usage.
- Data from a customer information system (CIS), while not required, is recommended because it produces insights related to customer energy usage.

AWS account

If you don’t already have an AWS account, create one at https://aws.amazon.com by following the on-screen instructions. Part of the sign-up process involves receiving a phone call and entering a PIN using the phone keypad.

Your AWS account is automatically signed up for all AWS services. You are charged only for the services you use.

Technical requirements

Before you launch the Quick Start, review the following information and ensure that your account is properly configured. Otherwise, deployment might fail.

Resource quotas

If necessary, request service quota increases for the following resources. You might need to request increases if your existing deployment currently uses these resources and if this Quick Start deployment could result in exceeding the default quotas. The Service Quotas console displays your usage and quotas for some aspects of some services. For more information, see What is Service Quotas? and AWS service quotas.

| Resource | This deployment uses |
|---|---|
| VPCs | 1 |
| AWS Identity and Access Management (IAM) roles | 7 |
| Amazon S3 buckets | 6 |
| AWS Glue crawlers | 4 |
| AWS Glue jobs | 3 |
| Amazon SageMaker notebook instances | 1 |
| Amazon SageMaker endpoints | 1 |
| AWS Lambda functions | 9 |
| Amazon API Gateway endpoints | 1 |
| Amazon Redshift instances | 1 |
| AWS Step Functions state machines | 3 |

Deploying the MRASCo data adapter option adds the following resources:

| Resource | Additional adapter resources |
|---|---|
| Amazon S3 buckets | 1 |
| AWS Lambda functions | 1 |

Supported AWS Regions

For any Quick Start to work in a Region other than its default Region, all the services it deploys must be supported in that Region. You can launch a Quick Start in any Region and see if it works. If you get an error such as “Unrecognized resource type,” the Quick Start is not supported in that Region.

For an up-to-date list of AWS Regions and the AWS services they support, see AWS Regional Services.

| Certain Regions are available on an opt-in basis. For more information, see Managing AWS Regions. |

IAM permissions

Before launching the Quick Start, you must sign in to the AWS Management Console with IAM permissions for the resources that the templates deploy. The AdministratorAccess managed policy within IAM provides sufficient permissions, although your organization may choose to use a custom policy with more restrictions. For more information, see AWS managed policies for job functions.

Prepare for the deployment

To see the full capabilities of this Quick Start, make sure you have a sample dataset for utility meters from an MDM system. Optionally, to gain better insights, we recommend that you also have weather data, geolocation data, and CIS data corresponding to the utility meters.

For details on how to download and use a sample dataset with this Quick Start, see the Post-deployment steps section of this deployment guide.

Deployment options

This Quick Start creates a serverless architecture in your AWS account. As part of the deployment, it creates an Amazon Redshift cluster, which is created in a private subnet within a VPC.

For deployment of the Amazon Redshift cluster, this Quick Start provides two deployment options:

- Deploy Utility Meter Data Analytics into a new VPC. This option builds a new AWS environment consisting of the VPC, the subnet, and other infrastructure components. It then deploys an Amazon Redshift cluster into the new VPC.
- Deploy Utility Meter Data Analytics into an existing VPC. This option provisions an Amazon Redshift cluster in your existing AWS infrastructure.

The Quick Start provides separate templates for these options. It also lets you configure Classless Inter-Domain Routing (CIDR) blocks, the number of data processing units (DPUs) for AWS Glue jobs, and the source S3 bucket, as discussed later in this guide.

Deployment steps

Sign in to your AWS account

- Sign in to your AWS account at https://aws.amazon.com with an IAM user role that has the necessary permissions. For details, see Planning the deployment earlier in this guide.
- Make sure that your AWS account is configured correctly, as discussed in the Technical requirements section.

Launch the Quick Start

| You are responsible for the cost of the AWS services used while running this Quick Start reference deployment. There is no additional cost for using this Quick Start. For full details, see the pricing pages for each AWS service used by this Quick Start. Prices are subject to change. |

- Sign in to your AWS account, and choose one of the following options to launch the AWS CloudFormation template. For help with choosing an option, see Deployment options earlier in this guide.

  Deploy Utility Meter Data Analytics into an existing VPC on AWS

| If you’re deploying Utility Meter Data Analytics into an existing VPC, make sure that your VPC has two private subnets in different Availability Zones for the workload instances and that the subnets aren’t shared. This Quick Start doesn’t support shared subnets. These subnets require network address translation (NAT) gateways in their route tables so that the instances can download packages and software without exposing them to the internet. Also make sure that the domain name option in the DHCP options is configured as explained in DHCP options sets. You provide your VPC settings when you launch the Quick Start. |

Each deployment takes about 30 minutes to complete.

- Check the AWS Region that’s displayed in the upper-right corner of the navigation bar, and change it if necessary. This is where the network infrastructure for Utility Meter Data Analytics is built. The template is launched in the us-east-1 Region by default.
- On the Create stack page, keep the default setting for the template URL, and then choose Next.
- On the Specify stack details page, change the stack name if needed. Review the parameters for the template. Provide values for the parameters that require input. For all other parameters, review the default settings and customize them as necessary. For details on each parameter, see the Parameter reference section of this guide.
- On the Configure stack options page, you can specify tags (key-value pairs) for resources in your stack and set advanced options. When you finish, choose Next.
- On the Review page, review and confirm the template settings. Under Capabilities, select the two check boxes to acknowledge that the template creates IAM resources and might require the ability to automatically expand macros.
- Choose Create stack to deploy the stack.
- Monitor the status of the stack. When the status is CREATE_COMPLETE, the Utility Meter Data Analytics deployment is ready.
- To view the created resources, see the values displayed in the Outputs tab for the stack.

MRASCo data adapter option

This Quick Start features a MRASCo data adapter option to transform MRASCo data flow files to MDA landing zone format. To deploy this feature, choose mrasco for the DeploySpecialAdapters parameter during deployment. The MRASCo data adapter option deploys the following resources:

- An S3 bucket to store MRASCo data files. The S3 bucket is configured with an agent to invoke an AWS Lambda function when files are uploaded.
- An AWS Lambda function to retrieve MRASCo data files from the S3 bucket and transform them to MDA landing zone format.
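The following is a minimal sketch of how a Lambda function invoked by S3 uploads can find out which files arrived. It is not the adapter code that the Quick Start deploys: the function names are hypothetical, and the actual conversion from MRASCo data flow files to MDA landing zone format is left out. The event layout (Records, s3, bucket, object) is the standard Amazon S3 event notification structure.

```python
from urllib.parse import unquote_plus


def objects_from_s3_event(event: dict) -> list[tuple[str, str]]:
    """Return (bucket, key) pairs for every record in an S3 notification event."""
    pairs = []
    for record in event.get("Records", []):
        s3 = record["s3"]
        # Object keys arrive URL-encoded in S3 event notifications.
        pairs.append((s3["bucket"]["name"], unquote_plus(s3["object"]["key"])))
    return pairs


def handler(event, context):
    # Hypothetical entry point. A real adapter would download each object
    # here and write the converted file to the landing-zone bucket.
    return {"received": objects_from_s3_event(event)}
```

Decoding the key matters because a file name that contains spaces or special characters is escaped in the event payload.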

Postdeployment steps

To see the capabilities of the Quick Start, follow the steps in this section to use the dataset of meter reads from the City of London between 2011 and 2014. The Customize this Quick Start section explains how to edit the raw-data ETL script to work with your own meter data.

Download demo dataset

| To use the demo dataset, you must set the LandingzoneTransformer parameter to London during Quick Start deployment. |

Download the sample dataset from SmartMeter Energy Consumption Data in London Households. The file can be downloaded to your local machine, unzipped, and then uploaded to Amazon S3. However, given the size of the unzipped file (~11 GB), it’s faster to run an EC2 instance with sufficient permissions to write to Amazon S3, download the file to the EC2 instance, unzip it, and upload the data from there.

Mirror: Download from Kaggle

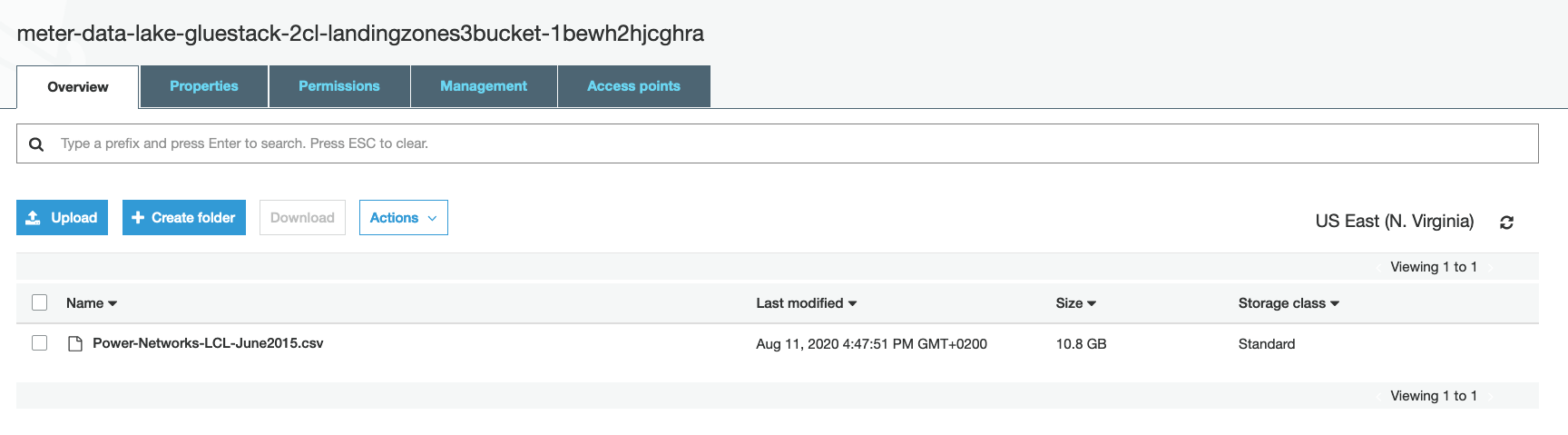

Upload the dataset to the landing-zone S3 bucket

You must upload the sample dataset to the landing-zone (raw-data) S3 bucket. The landing zone is the starting point for the AWS Glue workflow. Files that are placed in this S3 bucket are processed by the ETL pipeline. Furthermore, the AWS Glue extract, transform, and load (ETL) workflow tracks which files have been processed and which have not.

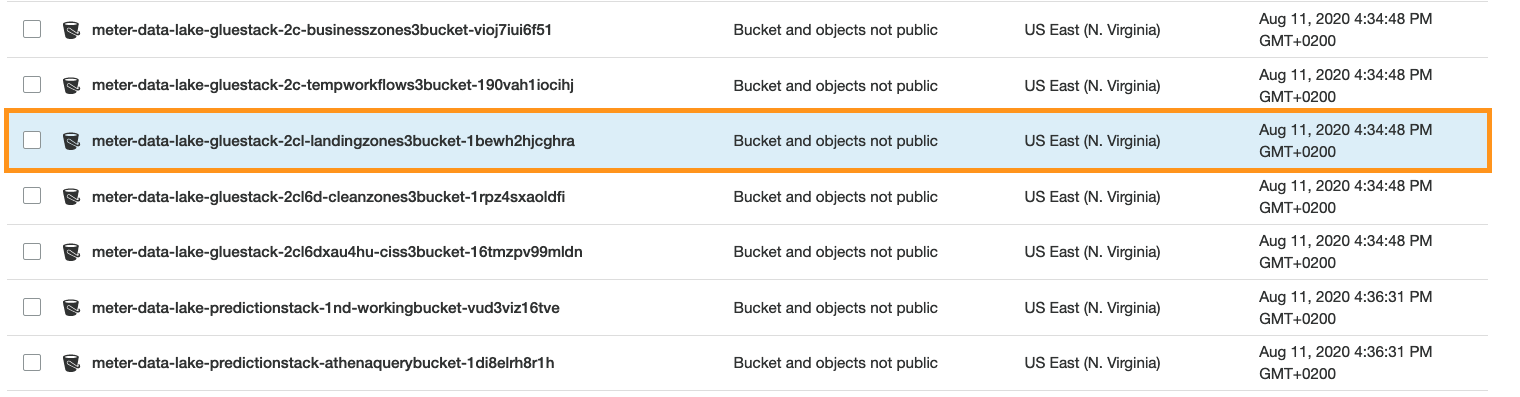

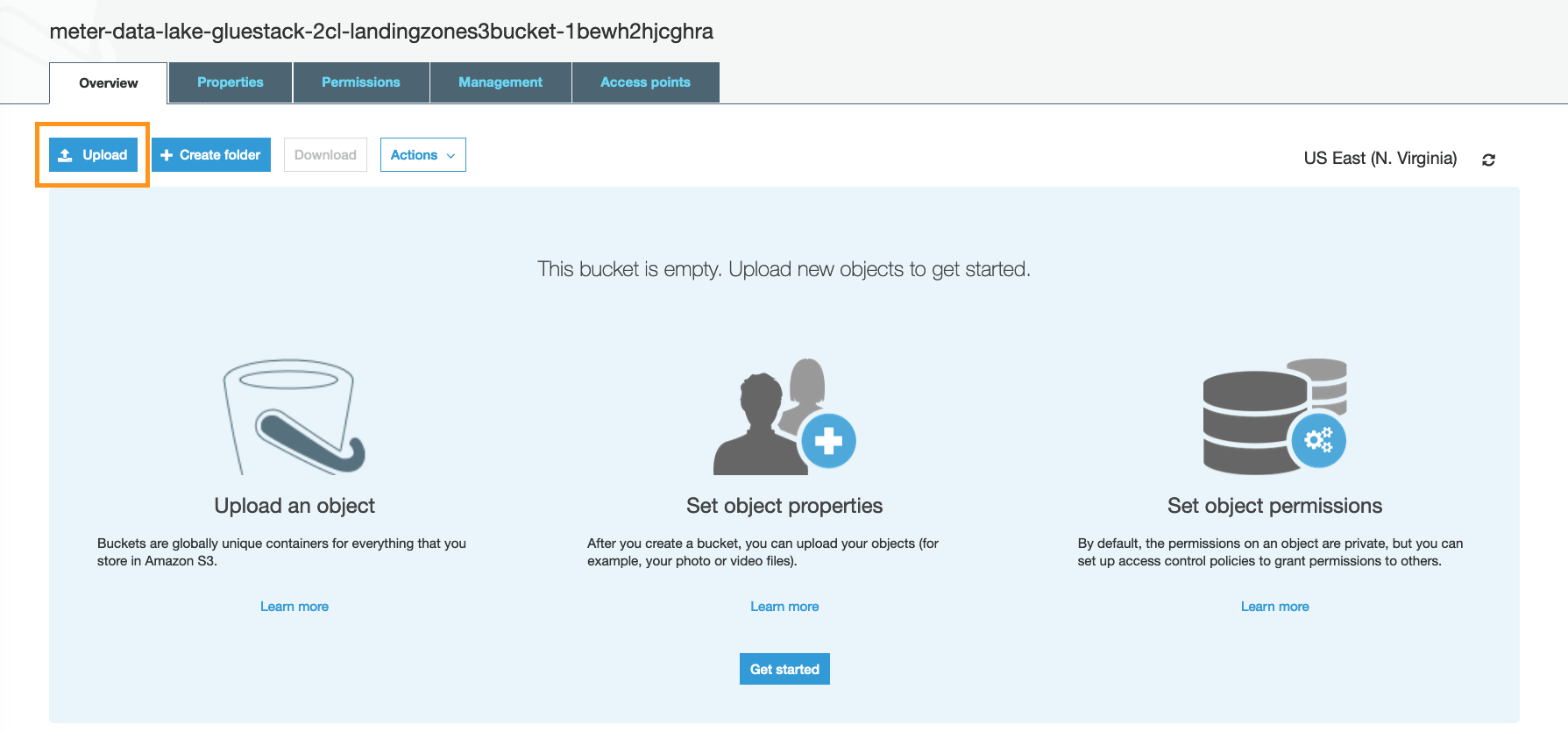

To upload the sample dataset to the landing-zone S3 bucket, follow these steps:

- Choose the S3 bucket with the name that contains landingzones. This is the starting point for the ETL process.
- Upload the London meter data to the landing-zone bucket.
- Verify that the directory contains one file with meter data and no subdirectories.

| The ETL workflow fails if the S3 bucket contains subdirectories. |
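Because subdirectories cause the workflow to fail, it can help to check the object keys before you start the pipeline. The sketch below assumes you already have the list of keys in the landing-zone bucket (for example, from an S3 listing). The function name and the sample file names are hypothetical.

```python
def keys_in_subdirectories(keys: list[str], prefix: str = "") -> list[str]:
    """Return the keys that sit inside a 'folder' below the given prefix."""
    nested = []
    for key in keys:
        # Look only at the part of the key that follows the prefix.
        relative = key[len(prefix):] if key.startswith(prefix) else key
        # S3 has no real directories; a slash in the key is what the
        # console and AWS Glue crawlers treat as a subdirectory.
        if "/" in relative:
            nested.append(key)
    return nested


print(keys_in_subdirectories(["meter-data.csv", "2013/part1.csv"]))
```

An empty result means that every object sits at the top level of the bucket.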

(Optional) Prepare weather data for use in training and forecasting

-

Download the sample weather dataset.

-

Upload the dataset to a new S3 bucket. The bucket can have any name. You must reference the bucket in a SQL statement in the next step.

-

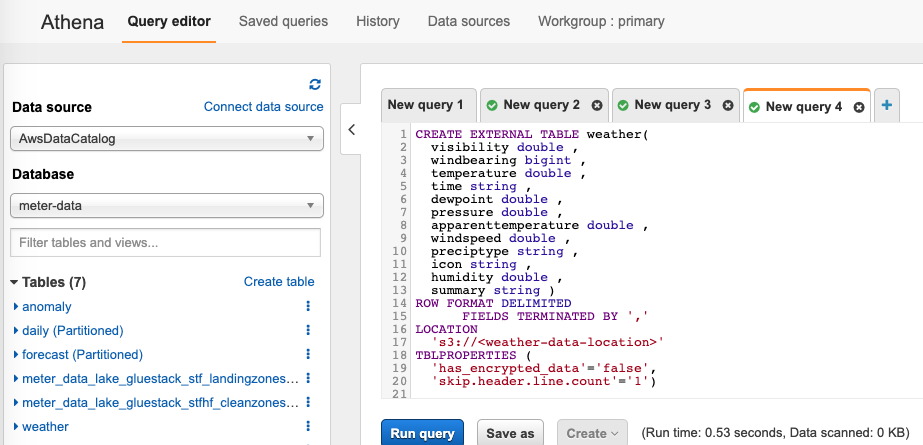

Create a weather table in the target AWS Glue database using the SQL statement in Figure 5. Replace

<weather-data-location>in the code with the location of the S3 bucket with the weather dataset.CREATE EXTERNAL TABLE weather( visibility double, windbearing bigint, temperature double, time string, dewpoint double, pressure double, apparenttemperature double, windspeed double, preciptype string, icon string, humidity double, summary string ) ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' LOCATION 's3://<weather-data-location>/' TBLPROPERTIES ( 'has_encrypted_data'='false', 'skip.header.line.count'='1');

If you have another weather dataset you want to use, verify that it contains at least the four columns shown in Table 1.

| Field | Type | Mandatory | Format |
|---|---|---|---|
| time | string | yes | |
| temperature | double | yes | |
| apparenttemperature | double | yes | |
| humidity | double | yes | |

| To enable the use of weather data, set the WithWeather parameter to 1 during Quick Start deployment. |
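If you bring your own weather file, a quick header check can confirm that the four mandatory fields are present before you create the table. This is a minimal sketch that assumes the file has a header row with these field names; the function name is hypothetical. Note that a delimited-text table maps columns by position, so the column order in the file must still match the CREATE EXTERNAL TABLE statement that you use.

```python
import csv
import io

REQUIRED_WEATHER_COLUMNS = {"time", "temperature", "apparenttemperature", "humidity"}


def missing_weather_columns(csv_text: str) -> set[str]:
    """Return the mandatory weather columns that the CSV header lacks."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader, [])
    # Compare case-insensitively because table column names are lowercase.
    present = {name.strip().lower() for name in header}
    return REQUIRED_WEATHER_COLUMNS - present


sample = "time,temperature,humidity\n2013-01-01 00:00:00,7.1,0.86\n"
print(missing_weather_columns(sample))
```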

(Optional) Prepare geolocation data

This Quick Start requires geolocation data to be uploaded to the geodata directory in the business-zone S3 bucket. The name of this bucket appears in the Outputs section in the AWS CloudFormation stack. The S3 path of the geolocation data should be s3://<business-zone-s3-bucket-name>/geodata/. The data should be in a CSV file format with the following structure:

| Field Name | Field Type |
|---|---|
| meter id | string |
| latitude | string |
| longitude | string |

The meter id, latitude, and longitude fields are mandatory. If any of this data is missing, API requests for meter-outage information return an error.
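Because missing values lead to API errors, you might validate the geolocation file before uploading it. The sketch below assumes a CSV file without a header row and with the columns in the order meter id, latitude, longitude; both are assumptions, because this guide does not specify them. The function name is hypothetical.

```python
import csv
import io


def invalid_geodata_rows(csv_text: str) -> list[int]:
    """Return 1-based numbers of rows that lack a meter ID or valid coordinates."""
    bad = []
    for number, row in enumerate(csv.reader(io.StringIO(csv_text)), start=1):
        try:
            # Unpacking fails with ValueError if the row does not have 3 fields.
            meter_id, latitude, longitude = (field.strip() for field in row)
            ok = (
                bool(meter_id)
                and -90 <= float(latitude) <= 90
                and -180 <= float(longitude) <= 180
            )
        except ValueError:
            # Raised for a wrong field count or a non-numeric coordinate.
            ok = False
        if not ok:
            bad.append(number)
    return bad


print(invalid_geodata_rows("MAC000138,51.5,-0.12\nMAC000139,,\n"))
```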

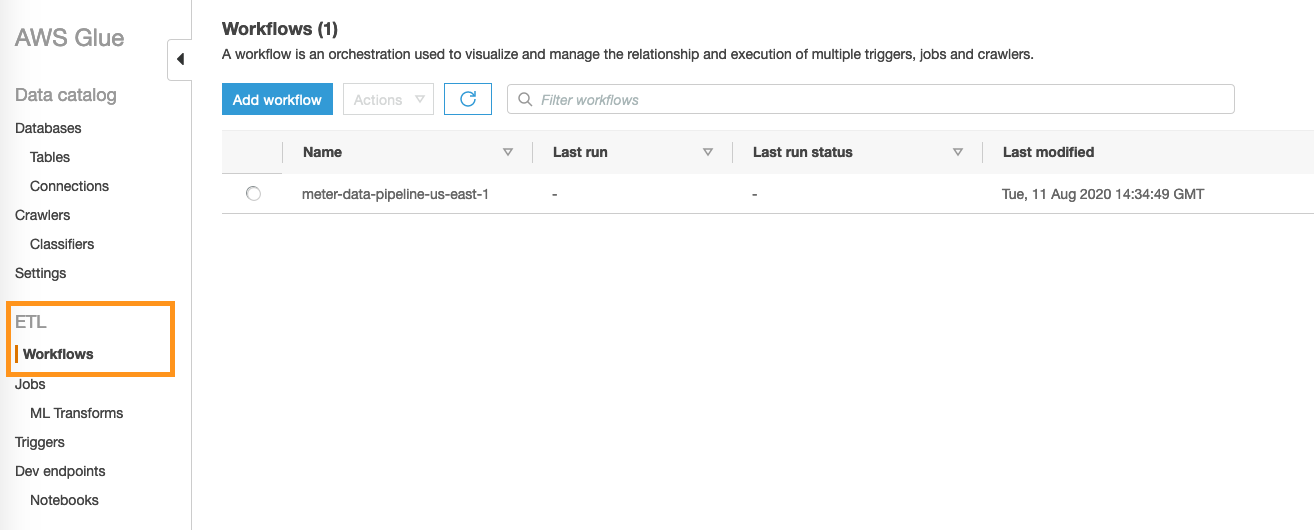

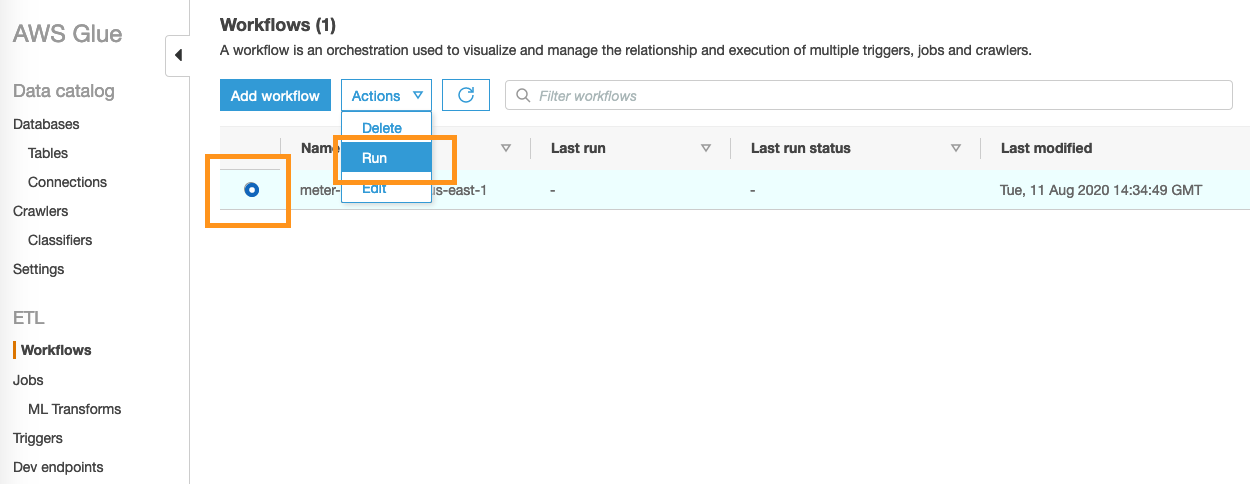

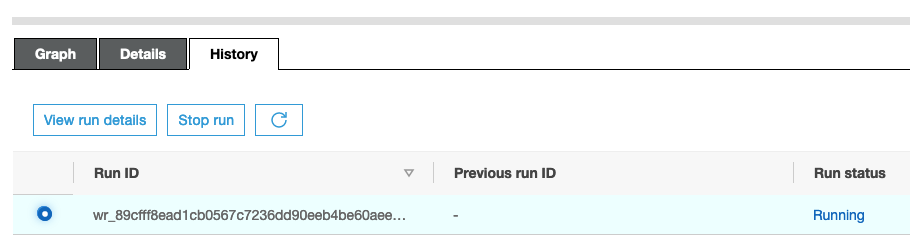

Start the AWS Glue ETL and ML training pipeline manually

By default, the pipeline is invoked each day at 9:00 a.m. to process newly arrived data in the landing-zone S3 bucket. To start the ETL pipeline manually, do the following:

Customize this Quick Start

You can customize this Quick Start for use with your own data format. To do that, adjust the first AWS Glue job to map the incoming data to the internal meter-data-lake schema. The following steps describe how.

The first AWS Glue job in the ETL workflow transforms the raw data in the landing-zone S3 bucket to clean data in the clean-zone S3 bucket. This step also maps the inbound data to the internal data schema, which is used by the rest of the steps in the AWS Glue ETL workflow and the ML state machines.

To update the data mapping, you can edit the AWS Glue job directly in the web editor.

-

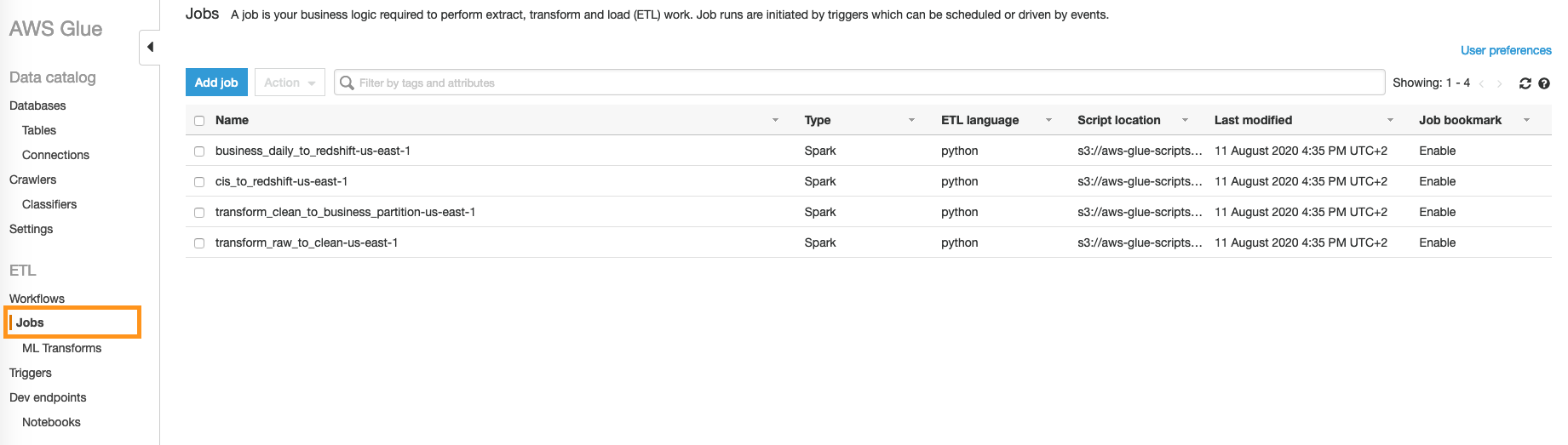

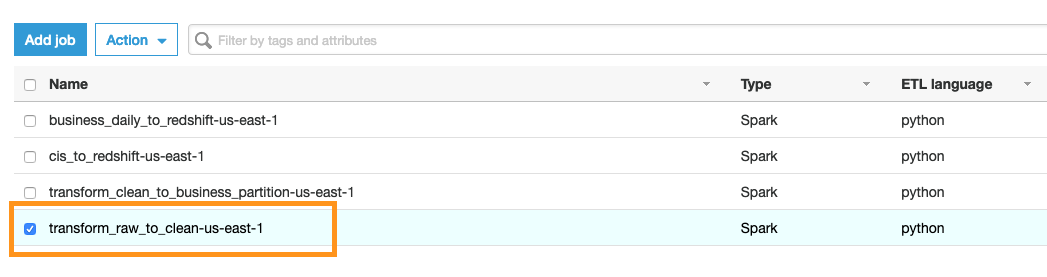

Open the AWS Glue console. Choose Jobs.

-

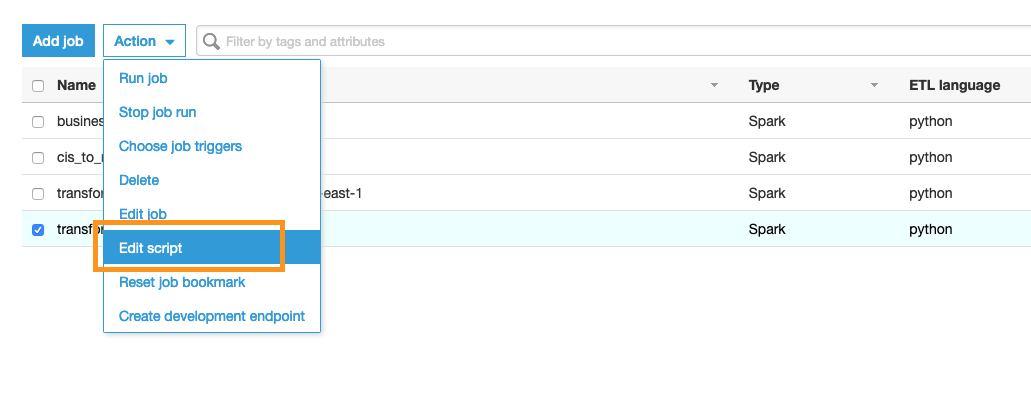

Select the ETL job

transform_raw_to_clean-. -

Choose Action, Edit script. Edit the input mapping in the script editor.

- To adopt a different input format, edit the ApplyMapping call. The internal model works with the following schema:

Table 3. Schema

| Field | Type | Mandatory | Format |
|---|---|---|---|
| meter_id | String | yes | |
| reading_value | double | yes | 0.000 |
| reading_type | String | yes | AGG\|INT |
| reading_date_time | Timestamp | yes | yyyy-MM-dd HH:mm:ss.SSS |
| date_str | String | yes | yyyyMMdd |
| obis_code | String | no | |
| week_of_year | int | no | |
| month | int | no | |
| year | int | no | |
| hour | int | no | |
| minute | int | no | |
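To illustrate what such a mapping has to produce, the following sketch converts one row of the London sample format into the internal schema. It is plain Python, not the AWS Glue script that the Quick Start deploys. The function name is hypothetical, and two choices are assumptions: half-hourly reads are labeled INT (interval reads), and week_of_year uses the ISO week number.

```python
from datetime import datetime


def to_internal_schema(row: dict) -> dict:
    """Map one London sample row to the internal meter-data schema."""
    # The sample timestamps carry seven fractional digits, but %f accepts
    # at most six, so the fraction is truncated before parsing.
    head, _, fraction = row["datetime"].partition(".")
    ts = datetime.strptime(f"{head}.{(fraction or '0')[:6]}", "%Y-%m-%d %H:%M:%S.%f")
    return {
        "meter_id": row["lclid"],
        "reading_value": float(row["kwh/hh (per half hour)"]),
        "reading_type": "INT",  # assumption: half-hourly reads are interval reads
        "reading_date_time": ts.strftime("%Y-%m-%d %H:%M:%S.") + f"{ts.microsecond // 1000:03d}",
        "date_str": ts.strftime("%Y%m%d"),
        "week_of_year": ts.isocalendar()[1],  # assumption: ISO week numbering
        "month": ts.month,
        "year": ts.year,
        "hour": ts.hour,
        "minute": ts.minute,
    }


sample_row = {
    "lclid": "MAC000002",
    "stdortou": "Std",
    "datetime": "2013-01-03 09:30:00.0000000",
    "kwh/hh (per half hour)": "0.219",
}
print(to_internal_schema(sample_row))
```

For your own data format, only the three source field names and the timestamp parsing would need to change; the derived fields follow from the parsed timestamp.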

How to retrain the model or use a custom model in the pipeline

This Quick Start deploys an ML pipeline, including a model-training workflow and a batch-processing workflow. This pipeline generates data that becomes the basis for forecasts and anomaly results. The initial deployment runs both workflows after ETL jobs are done.

After new data is added, you can rerun the entire AWS CloudFormation deployment to reprocess all the data and train a new model. Alternatively, you can rerun each part on a different schedule, as in these examples:

- Run the AWS Glue ETL workflow daily to process and format new data.
- Run the batch workflow weekly to generate forecasts and anomaly results for new data.
- Run the model-training workflow monthly to retrain the model to learn new customer-consumption patterns.

You can also use a custom model for the batch pipeline and the forecast API.

Run the batch prediction pipeline

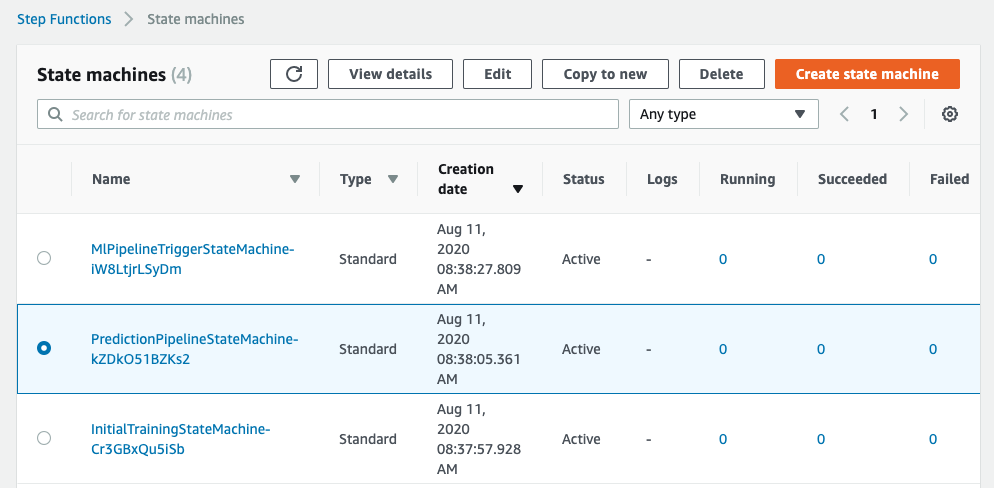

The batch pipeline is implemented by AWS Step Functions.

-

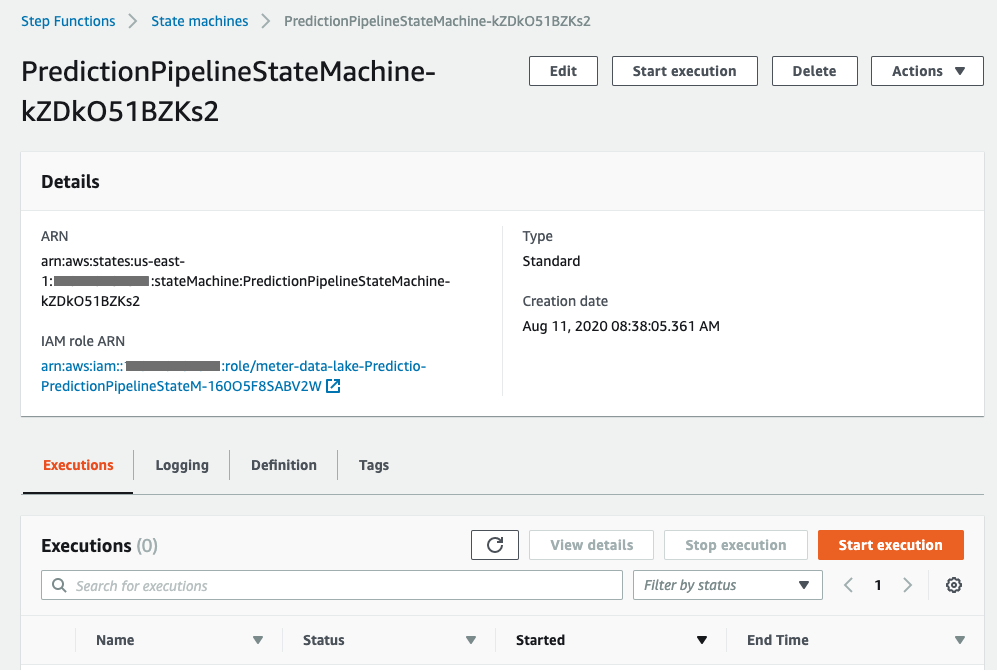

Open the Step Functions console. Find the state machine with the prefix

MachineLearningPipelinePreCalculationStateMachine-". -

Open the state machine, and choose Start execution.

The input parameter can be found in the DynamoDB table MachineLearningPipelineConfig. You can specify the range of meters you want to process, and the date range of your data. Note that Batch_size should not exceed 100.
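To see how a meter range and a batch size interact, consider the following sketch. It splits a range of meters into batches and rejects a batch size above 100. How the deployed state machine actually partitions the work is not described in this guide, so the inclusive bounds and the function name are assumptions.

```python
def meter_batches(meter_start: int, meter_end: int, batch_size: int) -> list[tuple[int, int]]:
    """Split an inclusive meter range into (first, last) batches."""
    if not 1 <= batch_size <= 100:
        raise ValueError("Batch_size must be between 1 and 100")
    if meter_end < meter_start:
        raise ValueError("Meter_end must not be smaller than Meter_start")
    return [
        (start, min(start + batch_size - 1, meter_end))
        for start in range(meter_start, meter_end + 1, batch_size)
    ]


# A range of 50 meters with a batch size of 25 yields two batches.
print(meter_batches(1, 50, 25))
```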

Adjust the following values in the DynamoDB table as needed:

"Meter_start": 1,
"Meter_end": 50,
"Batch_size": 25,
"Data_start": "2013-01-01",
"Data_end": "2013-10-01",
"Forecast_period": 7

Retrain the model with different parameters

-

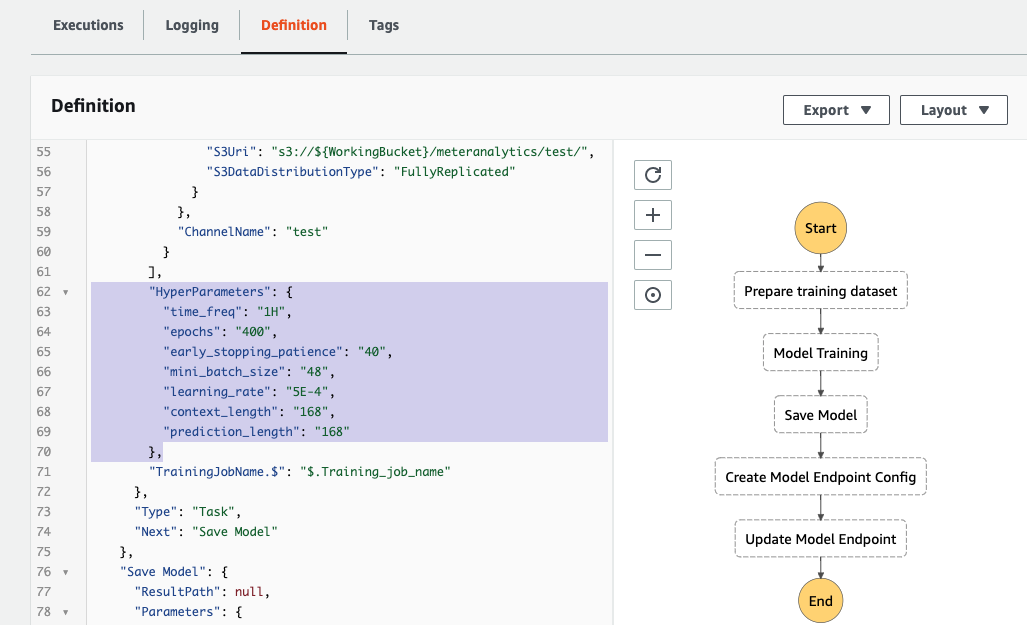

Open the Step Functions console. Find the state machine with prefix

MachineLearningPipelineModelTrainingStateMachine-. -

Open the state machine, and choose Definition.

-

In the

HyperParameterssection, modify or add more parameters. For more information, see DeepAR Hyperparameters. -

Save the change to the state machine, and choose Start execution.

The configuration will be loaded from the DynamoDB table MachineLearningPipelineConfig. The following parameters can be modified:

Training_samples specifies how many meters’ data is used to train the model. Using more meters makes the model more accurate but also makes training take longer.

A new unique ModelName and ML_endpoint_name will be generated.

"Training_samples": 20,
"Data_start": "2013-01-01",
"Data_end": "2013-10-01",
"Forecast_period": 7

Data schema and API I/O format

Data partitioning

The curated data in the business-zone S3 bucket is partitioned by reading type and reading date, as follows: s3://BusinessBucket/<reading_type_value>/<date>/<meter-data-file-in-parquet-format>.

You can find all meter reads for a day on the lowest level of the partition tree. To optimize query performance, the data is stored in a column-based file format (Parquet).

Late-arriving data

The data lake handles late-arriving data. If a meter sends data later, the ETL pipeline takes care of storing the late meter read in the correct partition.
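The key idea is that the partition depends on when the reading was taken, not on when it arrived. The following sketch derives a partition prefix from a reading’s own timestamp. The function name is hypothetical, and the yyyyMMdd form of the date segment is an assumption based on the date_str field of the internal schema.

```python
from datetime import datetime


def partition_prefix(bucket: str, reading_type: str, reading_date_time: str) -> str:
    """Build the partition prefix for a reading from its own timestamp."""
    ts = datetime.strptime(reading_date_time, "%Y-%m-%d %H:%M:%S.%f")
    # The arrival time plays no role, so a read that is delivered days
    # later still lands in the partition of the day it was taken.
    return f"s3://{bucket}/{reading_type}/{ts:%Y%m%d}/"


print(partition_prefix("BusinessBucket", "INT", "2013-01-03 09:30:00.000"))
```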

Meter-data format

The input meter-data format is variable and can be adjusted as described in the section 'Customize this Quick Start'. The sample input data format of the London meter reads looks like the following:

| Field | Type | Format |
|---|---|---|
| lclid | string | |
| stdortou | string | |
| datetime | string | yyyy-MM-dd HH:mm:ss.SSSSSSS |
| kwh/hh (per half hour) | double | 0.000 |
| acorn | string | |
| acorn_grouped | string | |

Table schemas

This Quick Start uses the following table schema for ETL jobs, ML model training, and API queries. This schema is also used in Amazon Redshift.

| Field | Type | Mandatory | Format |
|---|---|---|---|
| meter_id | String | X | |
| reading_value | double | X | 0.000 |
| reading_type | String | X | AGG\|INT* |
| reading_date_time | Timestamp | X | yyyy-MM-dd HH:mm:ss.SSS |
| date_str | String | X | yyyyMMdd |
| obis_code | String | | |
| week_of_year | int | | |
| month | int | | |
| year | int | | |
| hour | int | | |
| minute | int | | |

*AGG = Aggregated reads; INT = Interval reads

Energy-usage forecast

The energy forecast endpoint returns a list of predicted consumptions based on the trained ML model.

| Parameter | Description |
|---|---|
| meter_id | The ID of the meter for which the forecast should be made. |
| data_start | Start date of meter reads that should be used as a basis for the prediction. |
| data_end | End date of meter reads that should be used as a basis for the prediction. |
| ml_endpoint_name | The ML endpoint created by SageMaker. |
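The request format and the sample response shown in this section can be handled with a few lines of Python. The sketch below builds the request URL and converts the response keys to timestamps. Treating the keys as Unix epoch milliseconds in UTC is an inference from the sample values (the first key corresponds to 2013-10-01 00:00:00), not something this guide states. The function names are hypothetical.

```python
from datetime import datetime, timezone
from urllib.parse import quote, urlencode


def forecast_url(base_url: str, meter_id: str, data_start: str, data_end: str) -> str:
    """Build the forecast request URL for one meter."""
    query = urlencode({"data_start": data_start, "data_end": data_end})
    return f"{base_url.rstrip('/')}/forecast/{quote(meter_id, safe='')}?{query}"


def decode_forecast(response: dict) -> list[tuple[datetime, float]]:
    """Turn the 'consumption' object into time-ordered (timestamp, value) pairs."""
    points = [
        # Assumption: keys are Unix epoch milliseconds in UTC.
        (datetime.fromtimestamp(int(ms) / 1000, tz=timezone.utc), value)
        for ms, value in response["consumption"].items()
    ]
    return sorted(points)


print(forecast_url("https://xxxxxxxx.execute-api.us-east-1.amazonaws.com",
                   "MAC004734", "2013-05-01", "2013-10-01"))
```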

https://xxxxxxxx.execute-api.us-east-1.amazonaws.com/forecast/<meter_id>?data_start=<>&data_end=<>

curl "https://xxxxxxxx.execute-api.us-east-1.amazonaws.com/forecast/MAC004734?data_start=2013-05-01&data_end=2013-10-01"

{
  "consumption": {
    "1380585600000": 0.7684248686,
    "1380589200000": 0.4294368029,
    "1380592800000": 0.3510326743,
    "1380596400000": 0.2964941561,
    "1380600000000": 0.3064994216,
    "1380603600000": 0.4563854337,
    "1380607200000": 0.6386958361,
    "1380610800000": 0.5963768363,
    "1380614400000": 0.5587928891,
    "1380618000000": 0.4409750104,
    "1380621600000": 0.4719932675
  }
}

Anomaly detection

The endpoint returns a list of energy-usage anomalies for a specific meter in a given time range.

| Parameter | Description |
|---|---|
| meter_id | The ID of the meter for which anomalies should be determined. |
| data_start | Start date of the anomaly detection. |
| data_end | End date of the anomaly detection. |
| outlier_only | Determines whether all data or only outliers should be returned for a certain meter. |
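The sample response in this section is column-oriented: each field maps a row index to a value. For row-by-row processing, you can pivot it as in the following sketch. The function name is hypothetical, and the code relies only on the field names shown in the sample response.

```python
def anomaly_rows(response: dict, outliers_only: bool = False) -> list[dict]:
    """Pivot the column-oriented anomaly response into one dict per reading date."""
    # Every column shares the same row indexes, so the keys of any column work.
    index = sorted(response["meter_id"], key=int)
    rows = [{column: values[i] for column, values in response.items()} for i in index]
    if outliers_only:
        rows = [row for row in rows if row["anomaly"] != 0]
    return rows


sample = {
    "meter_id": {"1": "MAC000005", "2": "MAC000005"},
    "ds": {"1": "2013-01-02", "2": "2013-01-07"},
    "consumption": {"1": 5.7, "2": 11.436},
    "anomaly": {"1": 0, "2": 1},
}
print(anomaly_rows(sample, outliers_only=True))
```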

https://xxxxxxxx.execute-api.us-east-1.amazonaws.com/anomaly/<meter_id>?data_start=<>&data_end=<>&outlier_only=<>

curl "https://xxxxxxxx.execute-api.us-east-1.amazonaws.com/anomaly/MAC000005?data_start=2013-01-01&data_end=2013-12-31&outlier_only=0"

The output returns a list of reading dates in which anomalies are marked on a daily basis.

{
  "meter_id": {
    "1": "MAC000005",
    "2": "MAC000005"
  },
  "ds": {
    "1": "2013-01-02",
    "2": "2013-01-07"
  },
  "consumption": {
    "1": 5.7,
    "2": 11.436
  },
  "yhat_lower": {
    "1": 3.3661955822,
    "2": 3.5661772085
  },
  "yhat_upper": {
    "1": 9.1361769262,
    "2": 8.9443160402
  },
  "anomaly": {
    "1": 0,
    "2": 1
  },
  "importance": {
    "1": 0.0,
    "2": 0.217880724
  }
}

Meter-outage details

The endpoint returns a list of meters that delivered an error code instead of a valid reading. Data includes geographic information (latitude, longitude) which can be used to create a map of current meter outages; for example, to visualize an outage cluster.

| Parameter | Description |
|---|---|
| error_code | Error code which should be retrieved from the API. The parameter is optional. If not set, all errors in the timeframe will be returned. |
| start_date_time | Start of outage time frame. |
| end_date_time | End of outage time frame. |
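The following sketch builds an outage request with an optional error code and groups the returned meters by coordinates, which is one simple way to spot an outage cluster. The function names are hypothetical; the field names (Items, meter_id, lat, long) are taken from the sample response in this section.

```python
from collections import defaultdict
from urllib.parse import urlencode


def outage_url(base_url: str, start_date_time: str, end_date_time: str, error_code=None) -> str:
    """Build the outage request URL; error_code is omitted when not given."""
    params = {"start_date_time": start_date_time, "end_date_time": end_date_time}
    if error_code is not None:
        params["error_code"] = error_code
    # Spaces become '+', and ':' is left unescaped for readability.
    return f"{base_url.rstrip('/')}/outage?{urlencode(params, safe=':')}"


def outages_by_location(response: dict) -> dict:
    """Group meter IDs by their (lat, long) pair."""
    clusters = defaultdict(list)
    for item in response["Items"]:
        clusters[(item["lat"], item["long"])].append(item["meter_id"])
    return dict(clusters)


print(outage_url("https://xxxxxxxx.execute-api.us-east-1.amazonaws.com",
                 "2013-01-03 09:00:01", "2013-01-03 10:59:59"))
```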

https://xxxxxxxx.execute-api.us-east-1.amazonaws.com/outage?error_code=<>&start_date_time=<>&end_date_time=<>

curl "https://xxxxxxxx.execute-api.us-east-1.amazonaws.com/outage?start_date_time=2013-01-03+09:00:01&end_date_time=2013-01-03+10:59:59"

The output is a list of meters that had an outage in the requested time range.

{
  "Items": [
    {
      "meter_id": "MAC000138",
      "reading_date_time": "2013-01-03 09:30:00.000",
      "date_str": "20130103",
      "lat": 40.7177325,
      "long": -74.043845
    },
    {
      "meter_id": "MAC000139",
      "reading_date_time": "2013-01-03 10:00:00.000",
      "date_str": "20130103",
      "lat": 40.7177325,
      "long": -74.043845
    }
  ]
}

Aggregation API

The aggregation endpoint returns aggregated readings for a certain meter on a daily, weekly, or monthly basis.

| Parameter | Description |
|---|---|
| aggregation level | Daily, weekly, or monthly aggregation level. |
| year | The year the aggregation should cover. |
| meter_id | The ID of the meter for which the aggregation should be done. |
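The sample response in this section is a list of [meter ID, period, value] entries that is not sorted by date. The following sketch orders the entries and sums them. The function names are hypothetical, and the interpretation of the three positions is inferred from the sample.

```python
def consumption_url(base_url: str, level: str, year: int, meter_id: str) -> str:
    """Build the aggregation request URL."""
    if level not in ("daily", "weekly", "monthly"):
        raise ValueError("level must be daily, weekly, or monthly")
    return f"{base_url.rstrip('/')}/consumption/{level}/{year}/{meter_id}"


def ordered_consumption(rows: list[list]) -> list[tuple[str, float]]:
    """Return (period, value) pairs sorted by period."""
    # Each row is [meter_id, period, value]; the sample periods use
    # yyyyMMdd, so sorting them as strings gives chronological order.
    return sorted((period, value) for _, period, value in rows)


rows = [["MAC001595", "20130223", 3.34], ["MAC001595", "20130221", 5.316]]
ordered = ordered_consumption(rows)
print(ordered, sum(value for _, value in ordered))
```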

https://xxxxxx.execute-api.us-east-1.amazonaws.com/consumption/<daily|weekly|monthly>/<year>/<meter_id>

curl "https://xxxxxx.execute-api.us-east-1.amazonaws.com/consumption/daily/2013/MAC001595"

[
  [
    "MAC001595",
    "20130223",
    3.34
  ],
  [
    "MAC001595",
    "20130221",
    5.316
  ],
  [
    "MAC001595",
    "20130226",
    4.623
  ]
]

Best practices

Use Amazon S3 Glacier to archive meter data from raw, clean, and business S3 buckets for long-term storage and cost savings.
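One way to apply this practice is an S3 Lifecycle rule that transitions objects to the GLACIER storage class after a number of days. The sketch below only builds the configuration document. The function name, the rule ID, and the 365-day value are illustrative assumptions; choose a retention period that fits your own requirements.

```python
def glacier_lifecycle_configuration(days: int, prefix: str = "") -> dict:
    """Build an S3 Lifecycle configuration that archives objects to Glacier."""
    return {
        "Rules": [
            {
                "ID": f"archive-after-{days}-days",
                "Status": "Enabled",
                # An empty prefix applies the rule to the whole bucket.
                "Filter": {"Prefix": prefix},
                "Transitions": [{"Days": days, "StorageClass": "GLACIER"}],
            }
        ]
    }


config = glacier_lifecycle_configuration(365)
print(config)
# With boto3 installed and credentials configured, the document could be
# applied with:
#   boto3.client("s3").put_bucket_lifecycle_configuration(
#       Bucket="<your-bucket-name>", LifecycleConfiguration=config)
```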

FAQ

Q. I encountered a CREATE_FAILED error when I launched the Quick Start.

A. If AWS CloudFormation fails to create the stack, relaunch the template with Rollback on failure set to Disabled. This setting is under Advanced in the AWS CloudFormation console on the Configure stack options page. With this setting, the stack’s state is retained and the instance is left running so that you can troubleshoot the issue. (For Windows, look at the log files in %ProgramFiles%\Amazon\EC2ConfigService and C:\cfn\log.)

| When you set Rollback on failure to Disabled, you continue to incur AWS charges for this stack. Be sure to delete the stack when you finish troubleshooting. |

For additional information, see Troubleshooting AWS CloudFormation on the AWS website.

Q. I encountered a size-limitation error when I deployed the AWS CloudFormation templates.

A. Launch the Quick Start templates from the links in this guide or from another S3 bucket. If you deploy the templates from a local copy on your computer or from a location other than an S3 bucket, you might encounter template-size limitations. For more information, see AWS CloudFormation quotas.

Q. Does the Quick Start replace the existing MDMS from ISVs that utility companies have today?

A. Utility customers have expressed a need to break down data silos and bring greater value out of their data, specifically meter data. They have asked for AWS best practices for storing meter data in a data lake, and for help getting started running analytics and ML against that data to derive value for their own customers. This Quick Start directly answers these needs. Utility companies can hydrate the AWS data lake with data from their existing systems and continue to evolve the Quick Start architecture to meet their business needs. Customers, solution providers, and system integrators can take advantage of this Quick Start to start applying ML against meter data in a matter of days.

Q. Does the Quick Start include a connectivity (topology) model?

A. No. However, Amazon S3 is an object store and can hold any type of data, so you can modify the Quick Start to store a copy of the topology model from a utility’s geographic information system (GIS).

Q. What meter dataset is included with the Quick Start?

A. The Quick Start does not include meter data, but instead includes instructions on how to download a public dataset from the city of London, UK, including 5,567 households from November 2011 to February 2014.

Q. What weather data is included with the Quick Start?

A. The Quick Start does not include weather data, but instead includes instructions on how to download a public dataset. This weather data aligns with the geographical area and time period provided for London.

Q. Are any public datasets with large volumes of sample or anonymized smart meter data available?

A. Not at this time, although Amazon, via the Registry of Open Data on AWS (RODA) project, is currently researching options. Utilities or meter manufacturers can donate datasets for public use.

Q. Will the register and interval reads be taken into the data lake?

A. Yes. The data lake can take and process multiple reading types. Register and interval reads are stored in the data lake. However, only the interval data is used for the model training.

Q. Does the Quick Start include publicly available data about homes (for example, sqft) based on address?

A. No. Currently the Quick Start doesn’t include this information.

Q. How is the meter data structured in the data lake, and why is the data model standardized this way?

A. See information about the internal data structure in the Table schemas section in this deployment guide. The internal data schema is the result of working backwards from the requirements and talking to Partners with a long history of industry experience. Future use cases are possible for implementation. For information about separating the data lake into three layers, see AWS releases smart meter data analytics.

Q. Are there any other similar solutions that you are aware of, either from other cloud providers or on-premises solutions?

A. Some AWS Partners are working to adopt the Quick Start as a starting point and then build on top of it. When it comes to on-premises solutions, most utility companies have meter data and an MDM, and do some degree of analytics against the data. Very few do any form of ML against the data. Most want to do more, and do it in the cloud, to free themselves from the costs and constraints of on-premises analytics and ML.

Q. Are the ML models pre-trained?

A. No. The Quick Start contains data pipelines and notebooks for loading and training the models using your own data.

Q. What type of ML model is used for energy forecasting? Do I have to train a separate model for each meter?

A. The Quick Start uses the Amazon SageMaker DeepAR forecasting algorithm, which is a supervised learning algorithm for forecasting scalar (one-dimensional) time series using recurrent neural networks (RNN). This approach automatically produces a global model from the historical data of all time series in the dataset (for example, data from all your meters). This allows you to have one model for consumption forecasting, rather than a model for each meter. Note that this doesn’t mean one model can be used forever; you should retrain the model periodically to learn new patterns.

For more information, see DeepAR Forecasting Algorithm and DeepAR: Probabilistic forecasting with autoregressive recurrent networks.
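To illustrate what one global model over all meters means in practice, the following minimal sketch serializes the readings of several meters into the JSON Lines layout that DeepAR accepts for training, with one time series per line. The meter IDs, the readings, and the `to_deepar_lines` helper are hypothetical; this is not taken from the Quick Start's own pipeline, which may prepare its data differently.

```python
import json


def to_deepar_lines(series_by_meter, start):
    """Serialize per-meter readings into DeepAR JSON Lines records.

    series_by_meter: dict mapping a meter ID to a list of readings
    taken at a fixed interval.
    start: timestamp string of the first reading in every series.
    """
    lines = []
    # Sort the meter IDs so that each meter gets a stable category index.
    for index, meter_id in enumerate(sorted(series_by_meter)):
        record = {
            "start": start,                       # first timestamp of the series
            "target": series_by_meter[meter_id],  # values the model learns to forecast
            "cat": [index],                       # integer category identifying the meter
        }
        lines.append(json.dumps(record))
    return lines


# Hypothetical hourly readings (kWh) for two meters.
readings = {
    "meter-b": [0.42, 0.38, 0.51],
    "meter-a": [1.10, 0.95, 1.30],
}
lines = to_deepar_lines(readings, "2020-01-01 00:00:00")
print("\n".join(lines))
```

Because every meter becomes one line in the same training file, adding meters grows the dataset rather than the number of models.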

Q. For energy forecasting, does the ML model take weather into account? If so, how do I include weather data with meter data for training?

A. Weather data is optional. See the (Optional) Prepare weather data for use in training and forecasting section in this guide for instructions on how to use weather information as part of the training pipeline. That section also explains the expected weather data format.

Q. For energy forecasting, can you provide an example of how I would query the Forecast API for next week’s forecast energy usage with and without a weather forecast?

A. See the Data schema and API I/O format section in this guide for API descriptions with example requests. The provided Jupyter notebook also includes example usage of the API.

Q. What model is used for anomaly detection?

A. The Quick Start uses the Prophet library for anomaly detection. Prophet is a procedure for forecasting time-series data based on an additive model where nonlinear trends are fit with yearly, weekly, and daily seasonality plus holiday effects. It doesn’t support a feedback loop. You can choose to use other algorithms.

Q. Which use cases are available in the Quick Start for anomaly detection?

A. From an anomaly-detection perspective, the Quick Start supports the detection of abnormal usage (higher or lower than the expected range) in a certain time period based on historical data.
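As a minimal sketch of the "higher or lower than the expected range" idea, the following code flags readings that fall outside a forecast's uncertainty interval. The keys `yhat_lower` and `yhat_upper` follow the column names of Prophet's forecast output; the sample values and the `flag_anomalies` helper are hypothetical, and the Quick Start's own detection logic may differ.

```python
def flag_anomalies(readings, forecast):
    """Return (timestamp, value, direction) for readings outside the expected range.

    readings: dict of timestamp -> observed consumption.
    forecast: dict of timestamp -> {"yhat_lower": float, "yhat_upper": float}.
    """
    anomalies = []
    for ts in sorted(readings):
        value = readings[ts]
        bounds = forecast.get(ts)
        if bounds is None:
            continue  # no expected range for this timestamp, so nothing to compare
        if value > bounds["yhat_upper"]:
            anomalies.append((ts, value, "high"))
        elif value < bounds["yhat_lower"]:
            anomalies.append((ts, value, "low"))
    return anomalies


# Hypothetical daily consumption (kWh) and expected ranges.
readings = {"2020-01-01": 12.0, "2020-01-02": 30.5, "2020-01-03": 2.1}
forecast = {
    "2020-01-01": {"yhat_lower": 8.0, "yhat_upper": 16.0},
    "2020-01-02": {"yhat_lower": 8.5, "yhat_upper": 16.5},
    "2020-01-03": {"yhat_lower": 7.5, "yhat_upper": 15.5},
}
anomalies = flag_anomalies(readings, forecast)
print(anomalies)
```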

Q. Has any financial information been included for any of the forecasts, for example, to forecast the most cost-effective generation source to cover peak loads and prevent brownouts or blackouts?

A. No. Currently the only forecast use case that is supported is meter usage.

Q. Since meter data is time-series data, why is the Quick Start not using Amazon Timestream as a data store?

A. Timestream was not available at the time this Quick Start was published, although it will be considered for future iterations. Timestream is a good option for certain use cases such as real-time dashboards.

Customer responsibility

After you successfully deploy this Quick Start, confirm that your resources and services are updated and configured — including any required patches — to meet your security and other needs. For more information, see the AWS Shared Responsibility Model.

Parameter reference

| Unless you are customizing the Quick Start templates for your own deployment projects, keep the default settings for the parameters labeled Quick Start S3 bucket name, Quick Start S3 bucket Region, and Quick Start S3 key prefix. Changing these parameter settings automatically updates code references to point to a new Quick Start location. For more information, see the AWS Quick Start Contributor’s Guide. |

Parameters for deploying into an existing VPC

| Parameter label (name) | Default value | Description |
|---|---|---|
| Include Redshift cluster resources | | Deploy Amazon Redshift consumption hub. |
| Admin user name | | Administrator user name for the Amazon Redshift cluster. The user name must be lowercase, begin with a letter, contain only alphanumeric characters, '_', '+', '.', '@', or '-', and be less than 128 characters. |
| Admin user password | | Administrator user password for the Amazon Redshift cluster. The password must be 8–64 characters, contain at least one uppercase letter, at least one lowercase letter, and at least one number. It can only contain ASCII characters (ASCII codes 33–126), except ' (single quotation mark), " (double quotation mark), /, \, or @. |
| Amazon Redshift cluster name | | Amazon Redshift cluster name. |
| Amazon Redshift database name | | Name of the Amazon Redshift database. |
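The administrator user name and password rules described above can be checked locally before you launch the stack. The following is a minimal sketch that encodes those rules as written in this guide; the helper names and sample values are hypothetical, and the CloudFormation template remains the authority on what is accepted.

```python
import re

# A lowercase letter first, then lowercase alphanumerics or _ + . @ -,
# for a total length of fewer than 128 characters.
USERNAME_RE = re.compile(r"[a-z][a-z0-9_+.@-]{0,126}")

# Characters that the password description explicitly excludes.
FORBIDDEN_PASSWORD_CHARS = set("'\"/\\@")


def is_valid_username(name):
    return USERNAME_RE.fullmatch(name) is not None


def is_valid_password(password):
    if not 8 <= len(password) <= 64:
        return False
    for ch in password:
        # Only ASCII codes 33-126 are allowed, minus the excluded characters.
        if not 33 <= ord(ch) <= 126 or ch in FORBIDDEN_PASSWORD_CHARS:
            return False
    return (
        any(ch.isupper() for ch in password)
        and any(ch.islower() for ch in password)
        and any(ch.isdigit() for ch in password)
    )


# Hypothetical sample values.
print(is_valid_username("admin_user"))    # True
print(is_valid_password("Example1Pass"))  # True
print(is_valid_password("Ex@mple1Pass"))  # False: contains @
```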

| Parameter label (name) | Default value | Description |
|---|---|---|
| VPC ID | | ID of the VPC in which to create the Amazon Redshift cluster. |
| Subnet 1 ID | | ID of subnet 1 in which to create the Amazon Redshift cluster. |
| Subnet 2 ID | | ID of subnet 2 in which to create the Amazon Redshift cluster. |
| Remote access CIDR block | | CIDR block from which access to the Amazon Redshift cluster is allowed. |

| Parameter label (name) | Default value | Description |
|---|---|---|
| Transformer that reads the landing-zone data | | Defines the transformer for the input data in the landing zone. The default is the transformer that works with the London test dataset. |
| Create landing-zone bucket | | Choose "No" only if you have an existing S3 bucket with raw meter data that you want to use. If you choose "No," you must provide a value for the landing-zone S3 bucket name. |
| Landing-zone S3 bucket | | You must provide a value if you chose "No" for the parameter CreateLandingZoneS3Bucket. Otherwise, keep this box blank. |
| Special data adapters for metering conversions | | Choose |
| Number of meters | | Approximate number of meters in your dataset that need to be processed by the pipeline. This is used to configure the appropriate number of Data Processing Units (DPUs) for the AWS Glue job. The default value works for sample datasets or evaluation purposes. For a production deployment with millions of meters, choose |
| Include ETL aggregation workflow | | Deploy the ETL aggregation workflow. |

| Parameter label (name) | Default value | Description |
|---|---|---|
| Quick Start S3 bucket name | | Name of the S3 bucket for your copy of the Quick Start assets. Keep the default name unless you are customizing the template. Changing the name updates code references to point to a new Quick Start location. This name can include numbers, lowercase letters, uppercase letters, and hyphens, but it cannot start or end with a hyphen (-). See https://aws-quickstart.github.io/option1.html. |
| Quick Start S3 key prefix | | S3 key prefix that is used to simulate a directory for your copy of the Quick Start assets. Keep the default prefix unless you are customizing the template. Changing this prefix updates code references to point to a new Quick Start location. This prefix can include numbers, lowercase letters, uppercase letters, hyphens (-), and forward slashes (/). End the prefix with a forward slash. See https://docs.aws.amazon.com/AmazonS3/latest/dev/UsingMetadata.html and https://aws-quickstart.github.io/option1.html. |
| Quick Start S3 bucket Region | | AWS Region where the Quick Start S3 bucket (QSS3BucketName) is hosted. Keep the default Region unless you are customizing the template. Changing this Region updates code references to point to a new Quick Start location. When using your own bucket, specify the Region. See https://aws-quickstart.github.io/option1.html. |
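If you do customize the Quick Start location, the naming rules described above for the bucket name and key prefix can be checked up front. The following is a minimal sketch that derives two regular expressions from those descriptions; the `check_asset_location` helper and the sample values are hypothetical, and the patterns are an interpretation of the text in this guide rather than a copy of the template's own validation.

```python
import re

# Letters, digits, and hyphens; the name must not start or end with a hyphen.
BUCKET_NAME_RE = re.compile(r"[0-9a-zA-Z]([0-9a-zA-Z-]*[0-9a-zA-Z])?")

# Letters, digits, hyphens, and forward slashes; the prefix must end with a slash.
KEY_PREFIX_RE = re.compile(r"[0-9a-zA-Z/-]*/")


def check_asset_location(bucket_name, key_prefix):
    """Return a list of problems found; an empty list means both values pass."""
    problems = []
    if not BUCKET_NAME_RE.fullmatch(bucket_name):
        problems.append(f"invalid bucket name: {bucket_name!r}")
    if not KEY_PREFIX_RE.fullmatch(key_prefix):
        problems.append(f"invalid key prefix: {key_prefix!r}")
    return problems


# Hypothetical values for a customized copy of the assets.
print(check_asset_location("my-quickstart-assets", "meter-analytics/"))
print(check_asset_location("-bad-name", "missing-trailing-slash"))
```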

Parameters for deploying into a new VPC

| Parameter label (name) | Default value | Description |
|---|---|---|
| Include Redshift cluster resources | | Deploy Amazon Redshift consumption hub. |
| Administrator user name | | Administrator user name for the Amazon Redshift cluster. The user name must be lowercase, begin with a letter, contain only alphanumeric characters, '_', '+', '.', '@', or '-', and be less than 128 characters. |
| Administrator user password | | Administrator user password for the Amazon Redshift cluster. The password must be 8–64 characters, contain at least one uppercase letter, at least one lowercase letter, and at least one number. It can only contain ASCII characters (ASCII codes 33–126), except ' (single quotation mark), " (double quotation mark), /, \, or @. |
| Amazon Redshift cluster name | | Amazon Redshift cluster name. |
| Amazon Redshift database name | | Name of the Amazon Redshift database. |

| Parameter label (name) | Default value | Description |
|---|---|---|
| Availability Zones | | Availability Zones to use for the subnets in the VPC. |
| VPC CIDR | | CIDR block for the VPC. |
| Private subnet 1A CIDR | | CIDR block for private subnet 1A, located in Availability Zone 1. |
| Private subnet 2A CIDR | | CIDR block for private subnet 2A, located in Availability Zone 2. |
| Remote access CIDR block | | CIDR block from which access to the Amazon Redshift cluster is allowed. |
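When you override the VPC and private subnet CIDR blocks, each subnet block must fall inside the VPC block and the subnet blocks must not overlap. The following minimal sketch checks both conditions with Python's standard `ipaddress` module. The `check_subnets` helper and the CIDR values are hypothetical examples, not the Quick Start's defaults.

```python
import ipaddress


def check_subnets(vpc_cidr, subnet_cidrs):
    """Return a list of problems found; an empty list means the layout is consistent."""
    # ip_network raises ValueError for malformed blocks or blocks with host bits set.
    vpc = ipaddress.ip_network(vpc_cidr)
    subnets = [ipaddress.ip_network(cidr) for cidr in subnet_cidrs]

    problems = []
    for subnet in subnets:
        if not subnet.subnet_of(vpc):
            problems.append(f"{subnet} is not inside {vpc}")
    # Compare every pair of subnets once.
    for i, first in enumerate(subnets):
        for second in subnets[i + 1:]:
            if first.overlaps(second):
                problems.append(f"{first} overlaps {second}")
    return problems


# Hypothetical VPC block with two private subnets.
print(check_subnets("10.0.0.0/16", ["10.0.0.0/19", "10.0.32.0/19"]))
print(check_subnets("10.0.0.0/16", ["10.0.0.0/19", "10.0.16.0/20"]))
```

The same module can validate the remote access CIDR block, because `ipaddress.ip_network` rejects malformed input.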

| Parameter label (name) | Default value | Description |
|---|---|---|
| Transformer that reads the landing-zone data | | Defines the transformer for the input data in the landing zone. The default is the transformer that works with the London test dataset. |
| Create landing-zone bucket | | Choose "No" only if you have an existing S3 bucket with raw meter data that you want to use. If you choose "No," you must provide a value for the landing-zone S3 bucket name. |
| Landing-zone S3 bucket | | You must provide a value if you chose "No" for the parameter CreateLandingZoneS3Bucket. Otherwise, keep this box blank. |
| Special data adapters for metering conversions | | Choose |
| Number of meters | | Approximate number of meters in your dataset that need to be processed by the pipeline. This is used to configure the appropriate number of Data Processing Units (DPUs) for the AWS Glue job. The default value works for sample datasets or evaluation purposes. For a production deployment with millions of meters, choose |
| Include ETL aggregation workflow | | Deploy the ETL aggregation workflow. |

| Parameter label (name) | Default value | Description |
|---|---|---|
| Quick Start S3 bucket name | | Name of the S3 bucket for your copy of the Quick Start assets. Keep the default name unless you are customizing the template. Changing the name updates code references to point to a new Quick Start location. This name can include numbers, lowercase letters, uppercase letters, and hyphens, but it cannot start or end with a hyphen (-). See https://aws-quickstart.github.io/option1.html. |
| Quick Start S3 key prefix | | S3 key prefix that is used to simulate a directory for your copy of the Quick Start assets. Keep the default prefix unless you are customizing the template. Changing this prefix updates code references to point to a new Quick Start location. This prefix can include numbers, lowercase letters, uppercase letters, hyphens (-), and forward slashes (/). End the prefix with a forward slash. See https://docs.aws.amazon.com/AmazonS3/latest/dev/UsingMetadata.html and https://aws-quickstart.github.io/option1.html. |
| Quick Start S3 bucket Region | | AWS Region where the Quick Start S3 bucket (QSS3BucketName) is hosted. Keep the default Region unless you are customizing the template. Changing this Region updates code references to point to a new Quick Start location. When using your own bucket, specify the Region. See https://aws-quickstart.github.io/option1.html. |

Send us feedback

To post feedback, submit feature ideas, or report bugs, use the Issues section of the GitHub repository for this Quick Start. To submit code, see the Quick Start Contributor’s Guide.

Quick Start reference deployments

See the AWS Quick Start home page.

GitHub repository

Visit our GitHub repository to download the templates and scripts for this Quick Start, to post your comments, and to share your customizations with others.

Notices

This document is provided for informational purposes only. It represents AWS’s current product offerings and practices as of the date of issue of this document, which are subject to change without notice. Customers are responsible for making their own independent assessment of the information in this document and any use of AWS’s products or services, each of which is provided “as is” without warranty of any kind, whether expressed or implied. This document does not create any warranties, representations, contractual commitments, conditions, or assurances from AWS, its affiliates, suppliers, or licensors. The responsibilities and liabilities of AWS to its customers are controlled by AWS agreements, and this document is not part of, nor does it modify, any agreement between AWS and its customers.

The software included with this paper is licensed under the Apache License, version 2.0 (the "License"). You may not use this file except in compliance with the License. A copy of the License is located at http://aws.amazon.com/apache2.0/ or in the accompanying "license" file. This code is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either expressed or implied. See the License for specific language governing permissions and limitations.